INFRASTRUCTURE

Building a Multi-Tier AI Agent System

How we designed a secure, tiered AI bot infrastructure for DevOps/SRE teams with role-based access control

The Challenge

Modern DevOps and SRE teams need AI assistance that goes beyond simple chatbots. We needed a system that could:

- Help developers with infrastructure queries, deployments, and debugging

- Provide read-only public access for service status and documentation

- Enable authenticated team members to perform infrastructure operations

- Maintain strict security boundaries to prevent prompt injection and privilege escalation

- Scale across multiple communication channels (web, Slack, Discord)

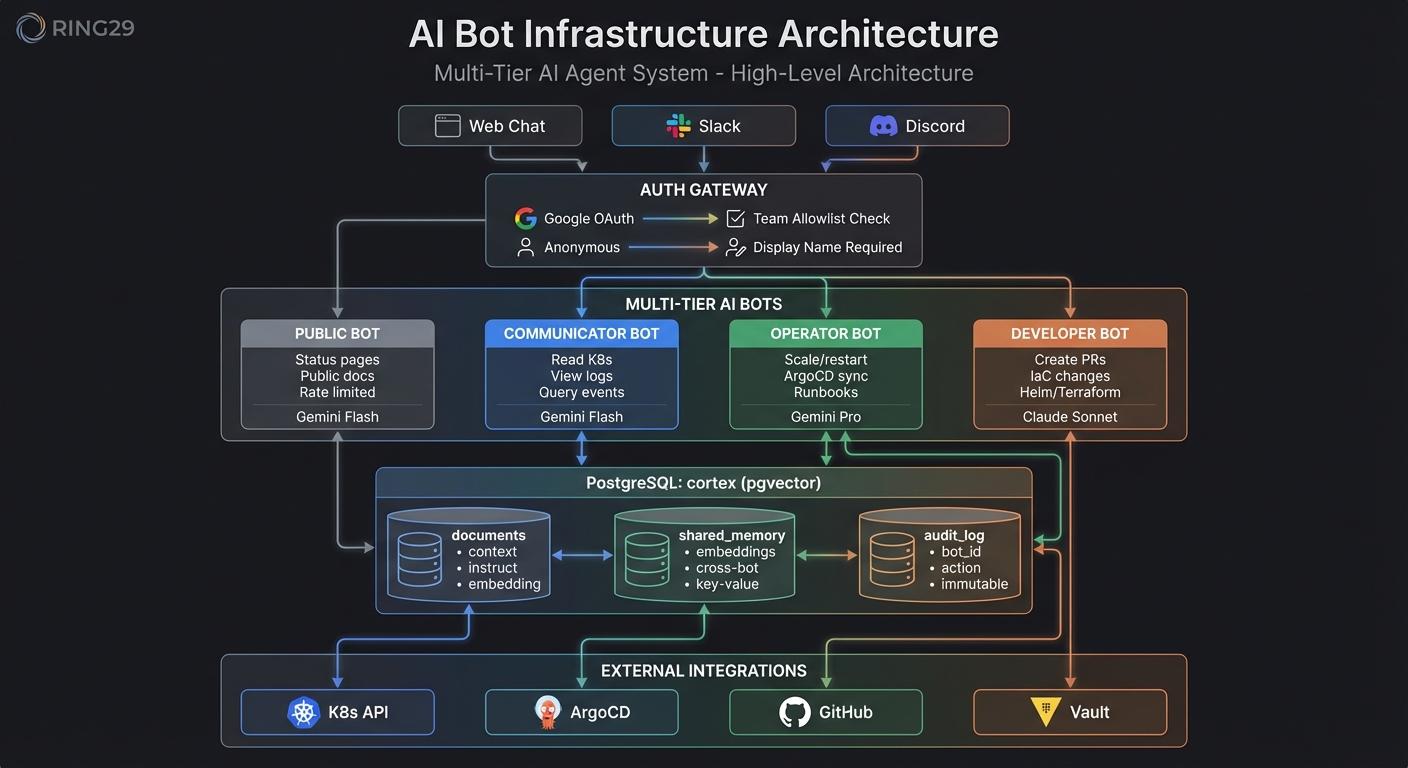

Architecture Overview

The system uses a tiered approach where each bot has specific capabilities and access levels. All bots share a common knowledge base through PostgreSQL with pgvector for semantic search.

The Four Bots

Public Bot

Anonymous access, minimal permissions

- • Service status and uptime information

- • Public documentation and FAQs

- • No access to internal infrastructure

- • Rate limited (10 requests/minute)

- • Uses Gemini Flash for fast responses

Communicator Bot

Authenticated team members, read-only access

- • Read pod status, deployment info, events, logs

- • Query Kubernetes resources across namespaces

- • View ArgoCD application status and history

- • Cannot modify any resources

- • Uses Gemini Flash for fast responses

Operator Bot

Infrastructure operations, write access

- • Scale deployments, restart pods

- • Trigger ArgoCD syncs and rollbacks

- • Execute runbooks and remediation scripts

- • Requires human approval for destructive actions

- • Uses Gemini Pro for complex reasoning

Developer Bot

Code changes, PR creation

- • Creates branches and commits for IaC changes

- • Opens pull requests with proper descriptions

- • Generates Terraform, Helm, and K8s manifests

- • Cannot approve or merge its own PRs

- • Uses Claude Sonnet for code generation

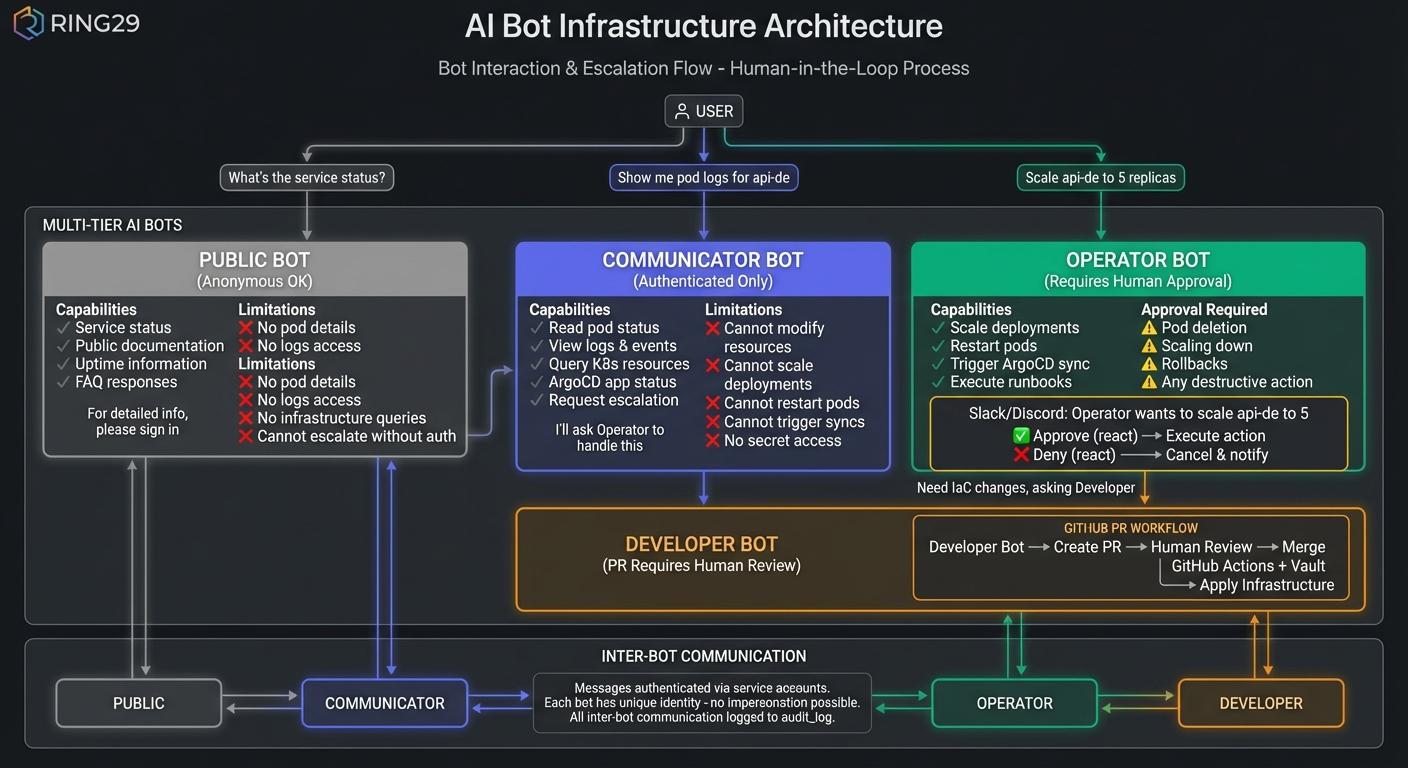

Bot Interaction & Escalation Flow

Requests flow through the bot hierarchy with human-in-the-loop approval for destructive actions:

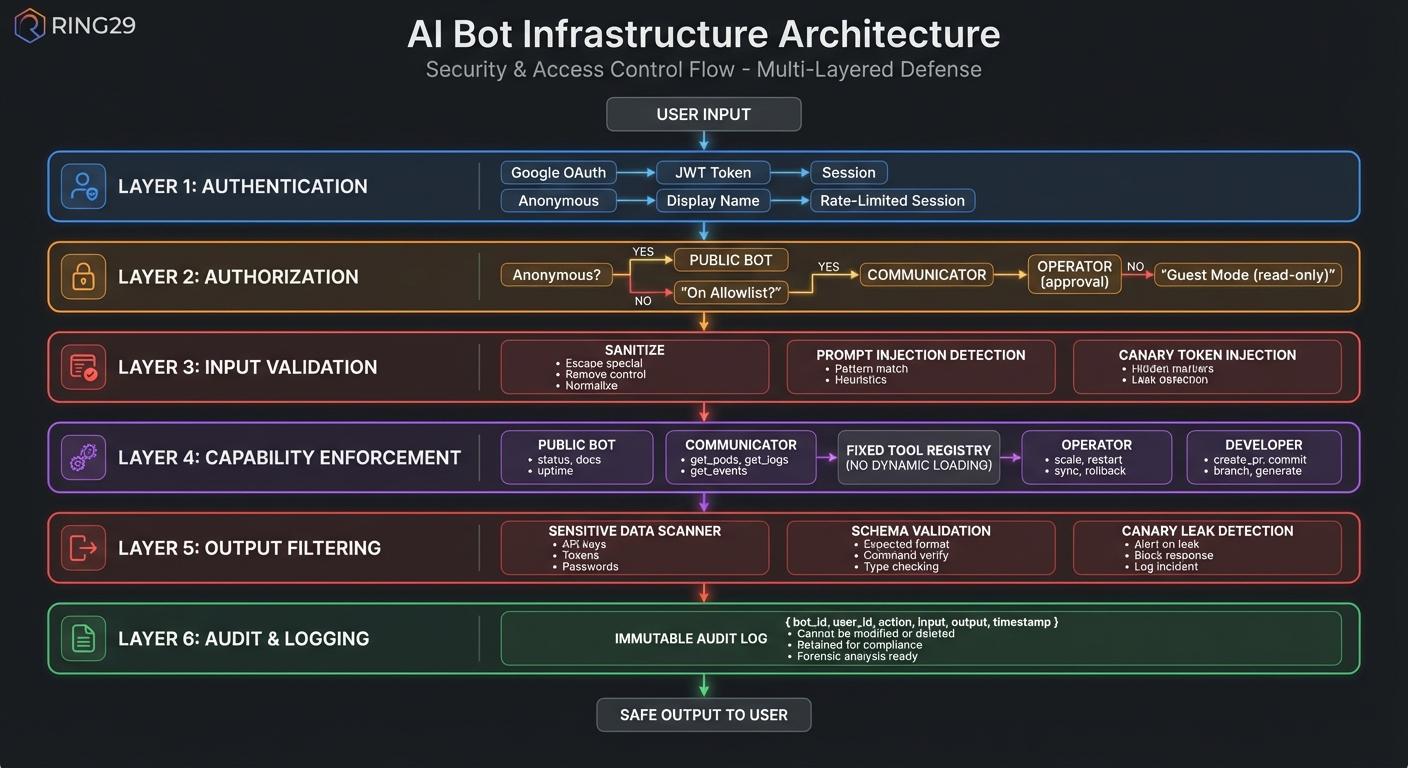

Security Model

Security is enforced at multiple layers:

Critical Restrictions (All Bots)

Database mutations follow a strict flow: Developer creates PR → Human reviews → Merge to main → GitHub Actions with temporary Vault credentials → Database migration.

Web Chat Integration

The web chat provides two access levels for DevOps/SRE workflows:

Authenticated Team Members

- • Google OAuth login required

- • Email must be on team allowlist

- • Full Communicator bot access

- • Can query infrastructure status

- • Can trigger inter-bot messaging for operations

Public Users

- • Display name required (for rate limiting)

- • Limited to Public Bot only

- • Service status and uptime info

- • Public documentation queries

End-User Use Cases

Beyond internal DevOps workflows, this architecture can be adapted for customer-facing scenarios:

Status Page Bot

Allow customers to query service status, incident history, and maintenance schedules through natural language instead of navigating a status page.

Documentation Assistant

Help users find relevant documentation, API references, and troubleshooting guides using semantic search over your knowledge base.

Support Triage

Pre-qualify support requests, gather diagnostic information, and route issues to the appropriate team before human intervention.

Kubernetes RBAC

Each bot runs with a dedicated service account with minimal permissions:

| Resource | Communicator | Operator | Developer |

|---|---|---|---|

| pods (get, list, logs) | ✓ | ✓ | ✓ |

| pods (delete) | ✗ | ✓ | ✗ |

| deployments (patch, scale) | ✗ | ✓ | ✗ |

| exec into pods | ✗ | ✓ | ✗ |

| secrets | ✗ | ✗ | ✗ |

Shared Memory with pgvector

All bots share context through a PostgreSQL database with pgvector extension for semantic search:

-- Documents table for context files CREATE TABLE documents ( id UUID PRIMARY KEY, name TEXT NOT NULL, content TEXT NOT NULL, embedding vector(1536), updated_at TIMESTAMPTZ DEFAULT NOW() ); -- Shared memory for cross-bot knowledge CREATE TABLE shared_memory ( id UUID PRIMARY KEY, key TEXT NOT NULL, value JSONB NOT NULL, embedding vector(1536), created_by TEXT NOT NULL, created_at TIMESTAMPTZ DEFAULT NOW() ); -- Immutable audit log CREATE TABLE audit_log ( id UUID PRIMARY KEY, bot_id TEXT NOT NULL, action TEXT NOT NULL, details JSONB, created_at TIMESTAMPTZ DEFAULT NOW() );

Deployment with Helm

Each bot is deployed using a universal Helm chart with per-bot value overrides:

# Deploy Communicator helm upgrade --install communicator ./chart \ --namespace ai-agents \ --create-namespace \ -f values-communicator.yaml # Deploy Operator helm upgrade --install operator ./chart \ --namespace ai-agents \ -f values-operator.yaml # Deploy Developer helm upgrade --install developer ./chart \ --namespace ai-agents \ -f values-developer.yaml

Security: Defending Against Prompt Injection

AI agents with infrastructure access are high-value targets. We implement defense-in-depth against prompt injection and other attacks:

Prompt Injection Mitigations

- Input Sanitization: All user inputs are sanitized before being passed to the LLM. Special characters and control sequences are escaped.

- System Prompt Isolation: System prompts are cryptographically signed and verified. User input is clearly delimited and cannot override system instructions.

- Output Validation: Bot responses are validated against expected schemas. Commands are parsed and verified before execution.

- Canary Tokens: Hidden tokens in system prompts detect extraction attempts. Alerts trigger on any canary leakage.

Privilege Escalation Prevention

- Capability-Based Security: Each bot has a fixed set of tools. The LLM cannot invoke tools outside its capability set.

- No Dynamic Tool Loading: Tool definitions are static and compiled. Bots cannot be tricked into loading new capabilities.

- Cross-Bot Isolation: Bots cannot impersonate each other. Inter-bot messages are authenticated with service accounts.

- Human Approval Gates: Destructive operations require out-of-band human approval via Slack/Discord reactions.

Data Exfiltration Prevention

- No Secret Access: Bots cannot read Kubernetes secrets or Secret Manager. Credentials are injected at runtime via Workload Identity.

- Output Filtering: Responses are scanned for sensitive patterns (API keys, tokens, passwords) before delivery.

- Rate Limiting: Aggressive rate limits prevent bulk data extraction attempts.

- Audit Logging: All queries and responses are logged for forensic analysis.

Indirect Injection Defense

- Content Boundaries: External data (logs, events, documents) is clearly marked as untrusted content.

- Retrieval Filtering: RAG results are sanitized before inclusion in prompts.

- Limited Context Windows: Bots only see relevant context, reducing attack surface from poisoned data.

Key Takeaways

Least Privilege: Each bot only has the permissions it needs. No bot can access secrets directly.

Human in the Loop: Destructive operations require human approval. Bots cannot approve their own changes.

Shared Context: All bots share knowledge through a common database, enabling consistent responses across channels.

Audit Everything: Every action is logged to an immutable audit table for compliance and debugging.

Try It Out

Head back to the homepage to interact with our AI assistant. Authenticated team members get full access to the Communicator bot for DevOps queries, while public users can check service status and documentation.

Talk to the Bot →